Cloud computing has long since emerged as the panacea for on-demand scalability and elasticity of IT resources, and organizations around the world are taking advantage of it at an unprecedented pace. Such scalability comes at a price: cloud resources that are not managed properly can add up to significant costs, which can seriously affect the business and undermine the very reason for deploying resources in the cloud. A solid DevOps design and architecture, followed by consistent implementation, can optimize the use of cloud resources. There are, however, factors outside the organization’s control, and this is where an EDoS (Economic Denial of Sustainability) attack becomes a reality. Cloud service costs are typically driven by usage, so keeping a resource artificially busy can be a very effective way of rendering that service unprofitable or too expensive to operate.

Cloud-based SIEMs are becoming the new reality, with traditional vendors cloudifying their existing on-prem solutions and native, born-in-the-cloud offerings like Azure Sentinel taking the SIEM market by storm. With some exceptions, the pricing model for such SIEM solutions is based on the volume of log data ingested on a daily or monthly basis. This makes perfect sense from the vendor’s perspective, as they have to handle the ingestion, storage and processing of the log data. The customer can also take advantage of this model by continuously optimizing log ingestion to strike the right balance between the volume of logs ingested and the potential value of those logs (from a cyberthreat visibility perspective).

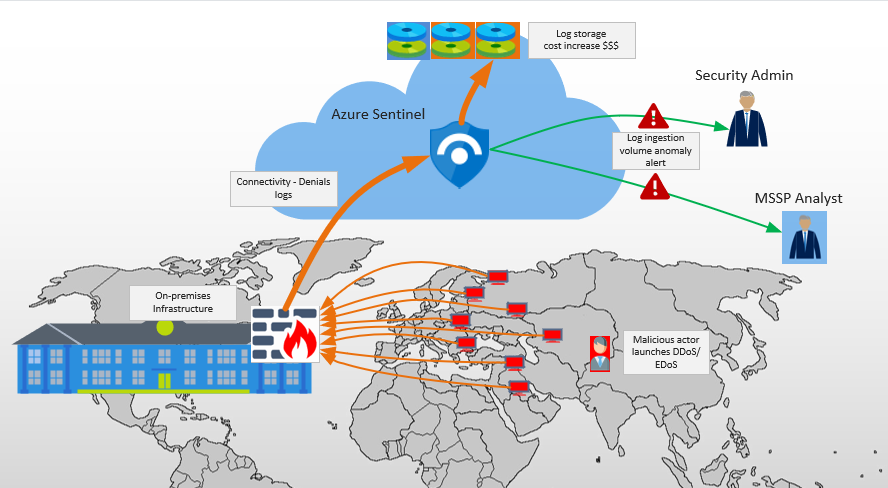

In the last few months, several of our Azure Sentinel customers have been subject to EDoS attacks, and we deployed mitigation controls to minimize their impact. In all of these cases, the scenario was identical:

- A malicious actor initiates an extensive scan against the customer’s public IP addresses from a large number of remote hosts

- The scan hits the customer’s firewall, triggering a large number of denials in the firewall logs

- The firewall logs are sent to the Azure Sentinel SIEM, causing a large increase in log ingestion volume

- The customer’s Azure usage bill increases significantly

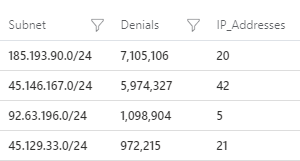

The most recent attack was detected on October 8th, and we identified 4 subnets that generated a large volume of denial messages within 24 hours:

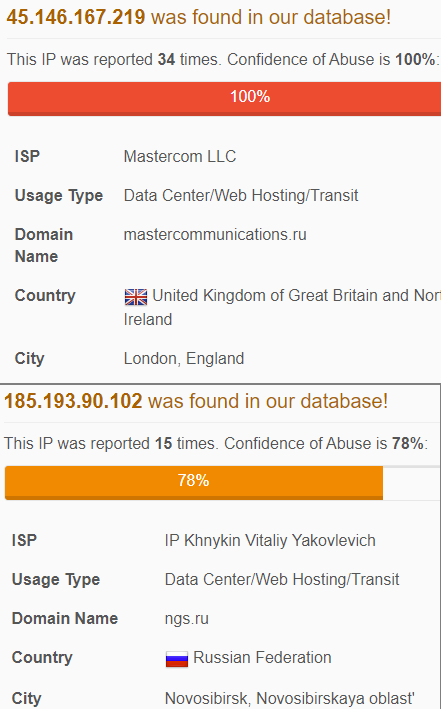
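Identifying the offending subnets boils down to grouping denied source addresses by network prefix and counting them. The Python sketch below does this with the standard `ipaddress` module. It assumes the denial events have already been exported as (source IP, action) pairs and groups them into /24 networks; both the input shape and the /24 granularity are illustrative assumptions, not how Sentinel stores or aggregates the data. The sample addresses come from the RFC 5737 documentation ranges.

```python
import ipaddress
from collections import Counter
from typing import Iterable, List, Tuple


def top_denied_subnets(
    events: Iterable[Tuple[str, str]],
    prefix_len: int = 24,
    top_n: int = 4,
) -> List[Tuple[ipaddress.IPv4Network, int]]:
    """Count denied events per source subnet and return the noisiest ones.

    `events` holds (source_ip, action) pairs: a hypothetical export format,
    not the schema of any Sentinel table.
    """
    counts: Counter = Counter()
    for src_ip, action in events:
        if action.lower() != "deny":
            continue  # allowed traffic is not what inflates the bill here
        # strict=False masks off the host bits: 203.0.113.7/24 -> 203.0.113.0/24
        net = ipaddress.IPv4Network(f"{src_ip}/{prefix_len}", strict=False)
        counts[net] += 1
    return counts.most_common(top_n)


if __name__ == "__main__":
    sample = [
        ("203.0.113.7", "deny"),
        ("203.0.113.9", "deny"),
        ("198.51.100.20", "deny"),
        ("192.0.2.1", "allow"),
    ]
    for net, hits in top_denied_subnets(sample):
        print(net, hits)
```

With the sample above, 203.0.113.0/24 comes first with 2 denials, followed by 198.51.100.0/24 with 1; the allowed event is ignored.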

A quick check on AbuseIPDB clearly shows that this attack affects a wide range of organizations.

To put some cost numbers behind these denial logs: a single log entry (for this particular type of firewall) costs around $0.000004. That seems negligible, but for an attack sustained over 30 days the entries add up to an increase of about $2,000 in log ingestion costs. For a very large organization this might not be an issue, but for SMBs these are not numbers to be ignored.
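Working backwards from the two figures above shows how much traffic such an attack implies. The sketch below is plain arithmetic on the numbers quoted in the text; the function and constant names are ours.

```python
# Back-of-the-envelope check of the EDoS figures quoted above.
PRICE_PER_ENTRY_USD = 0.000004  # approximate cost of one log entry for this firewall type
EXTRA_COST_USD = 2_000          # approximate 30-day increase in ingestion cost
DAYS = 30
SECONDS_PER_DAY = 86_400


def implied_volume(extra_cost_usd: float, price_per_entry_usd: float, days: int) -> dict:
    """Work backwards from a cost increase to the log volume that caused it."""
    total_entries = extra_cost_usd / price_per_entry_usd
    entries_per_day = total_entries / days
    eps = entries_per_day / SECONDS_PER_DAY  # sustained events per second
    return {
        "total_entries": total_entries,
        "entries_per_day": entries_per_day,
        "eps": eps,
    }


if __name__ == "__main__":
    v = implied_volume(EXTRA_COST_USD, PRICE_PER_ENTRY_USD, DAYS)
    print(f"{v['total_entries']:,.0f} entries, "
          f"{v['entries_per_day']:,.0f} per day, "
          f"{v['eps']:,.0f} EPS")
```

With these inputs the attack corresponds to roughly 500 million extra entries over the month, about 16.7 million per day, or around 193 events per second, sustained around the clock.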

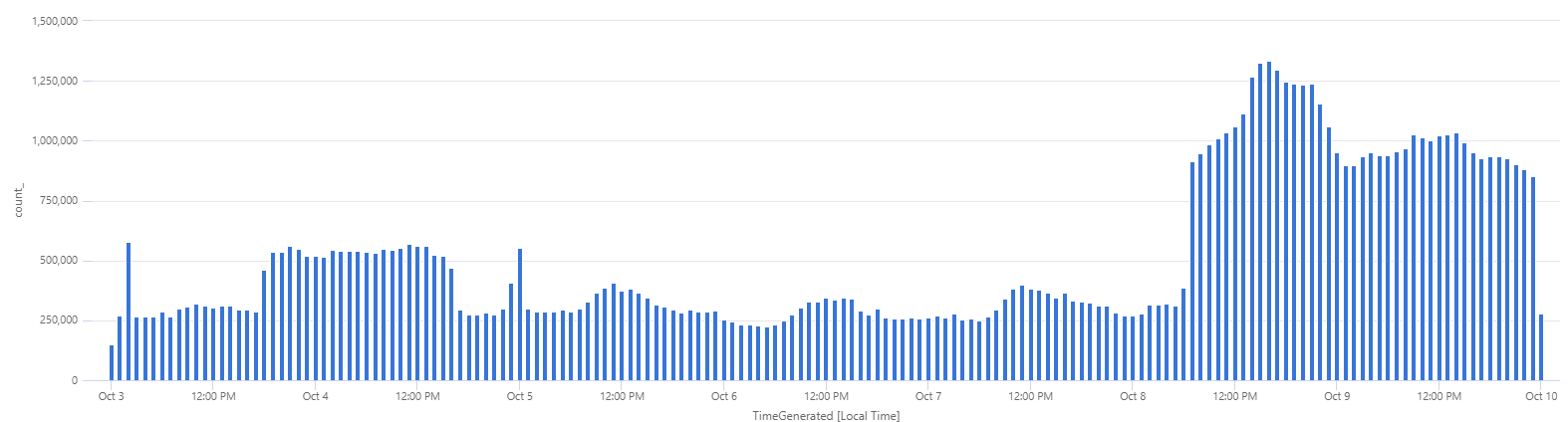

This can be quickly visualized in Sentinel, either by the number of denial log entries:

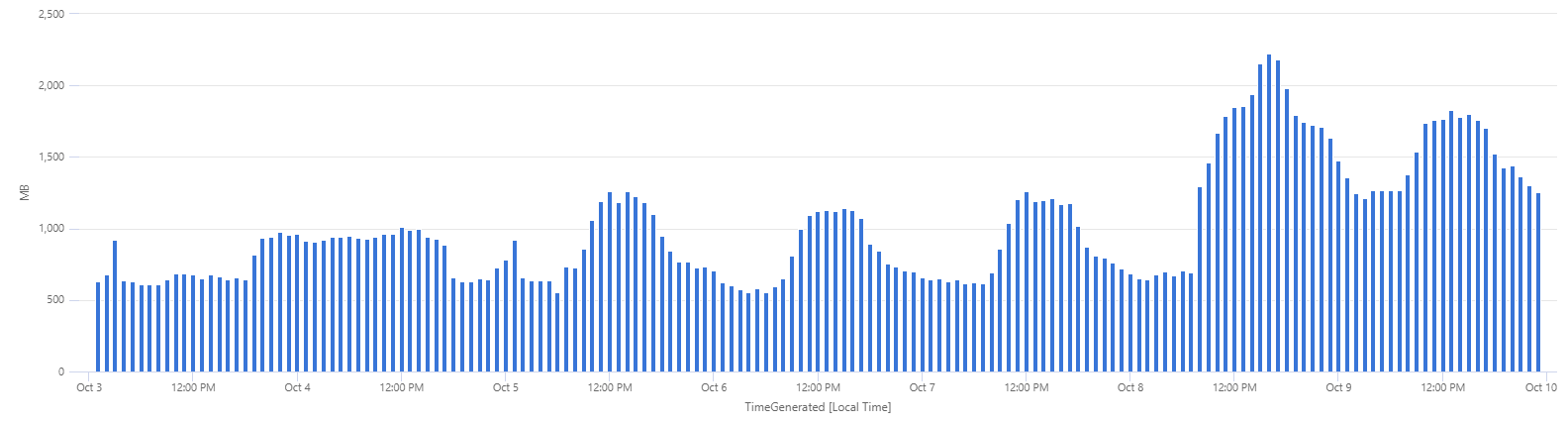

or by log volume, which translates directly into dollars.

A typical organization has very little control over what remote attackers can do, so what options are there to mitigate the consequences of such an attack?

Based on our Azure Sentinel practice (though this applies to any kind of SIEM, on-premises or in the cloud), we recommend the following:

- Optimize log ingestion – Understand the value that each type of log entry brings and, if it is not actionable, evaluate whether the $ spent on log ingestion/licensing is worth the information obtained from those entries. In this case, how much value do you get from log entries recording denials of external hosts? During our Sentinel implementations, we go through this exercise with our customers for each type of log source.

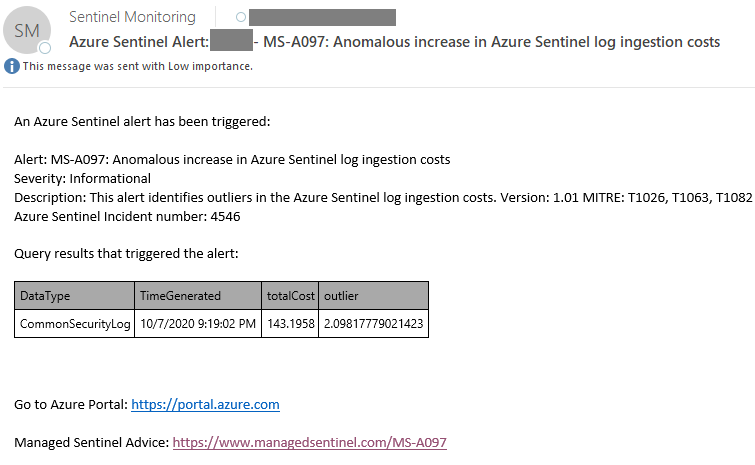

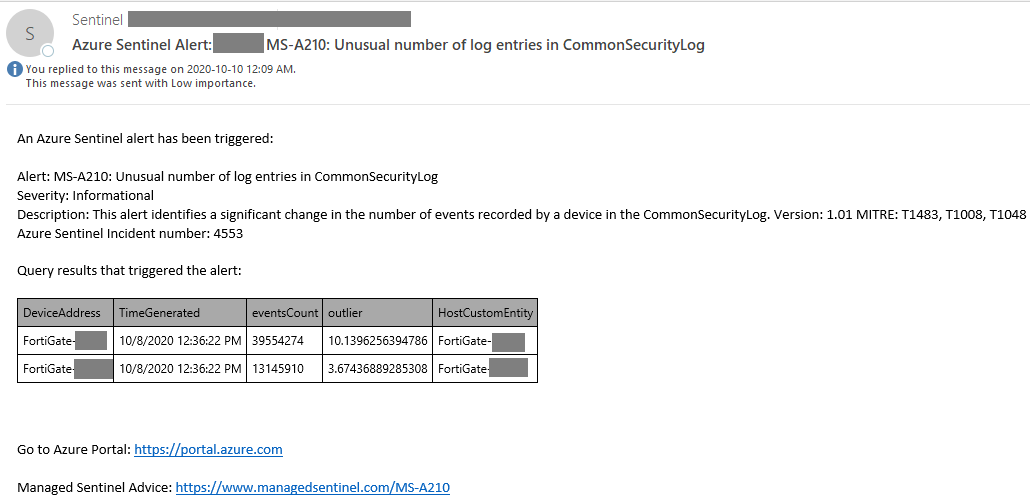

- Continuously monitor log volume and deploy alerts that identify outliers in log volume and cloud costs. In Azure Sentinel, the Kusto Query Language (KQL) provides built-in support for anomaly detection, allowing us to deploy multiple alerts that detect outliers in data sets such as firewall denials, inbound/outbound traffic, number of website errors, number of logins, increases in log ingestion costs, etc. The only limit for such detections is the availability of data from which to build a baseline.

- Design a process to minimize the effect of such attacks – in this case, one can create a firewall rule that blocks those subnets without generating log entries for them, or send these logs to a cheap, temporary log collector (e.g. a basic syslog server with enough storage to keep the data in case it is needed).

- Ideally, implement SOAR to detect such situations and automatically configure firewall rules that apply the previous suggestion (no. 3). Sentinel can do this as long as the firewall supports automation or rules that can be updated dynamically, such as Palo Alto EDLs (External Dynamic Lists).

- Impose a cap on daily log ingestion. This is debatable, as it may lead to some logging data being discarded, but it could be used by organizations with a very tight budget. The daily cap could be set several orders of magnitude above the normal daily volume, so that logging is stopped only in truly extreme situations.
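On the automation side, a Palo Alto EDL is essentially a plain-text list of IP addresses or CIDR ranges, one per line, that the firewall fetches periodically from a web server, so a SOAR playbook mainly has to keep that file up to date. The Python sketch below renders a set of offending subnets into such a list and shows the matching routing decision from the third suggestion (denials from listed subnets go to a cheap collector instead of the SIEM). The function names and the `"siem"` / `"cheap-collector"` labels are hypothetical; hosting the file and wiring it into a Sentinel playbook are not shown.

```python
import ipaddress
from typing import Iterable, Sequence


def build_edl(subnets: Iterable[str]) -> str:
    """Render offending subnets as a plain-text list, one CIDR entry per line.

    Overlapping and adjacent networks are merged so the list stays short.
    """
    nets = [ipaddress.IPv4Network(s, strict=False) for s in subnets]
    merged = ipaddress.collapse_addresses(nets)  # yields sorted, non-overlapping networks
    return "".join(f"{net}\n" for net in merged)


def route_log(src_ip: str, action: str, blocked: Sequence[ipaddress.IPv4Network]) -> str:
    """Pick a destination for one firewall log line (hypothetical labels).

    Denials from listed subnets go to a cheap collector; everything else
    still reaches the SIEM.
    """
    addr = ipaddress.IPv4Address(src_ip)
    if action.lower() == "deny" and any(addr in net for net in blocked):
        return "cheap-collector"
    return "siem"


if __name__ == "__main__":
    edl_text = build_edl(["203.0.113.0/24", "198.51.100.0/25", "198.51.100.128/25"])
    print(edl_text, end="")
    blocked = [ipaddress.IPv4Network(line) for line in edl_text.splitlines()]
    print(route_log("203.0.113.50", "deny", blocked))
    print(route_log("192.0.2.10", "deny", blocked))
```

Here the two adjacent /25 networks are merged into 198.51.100.0/24, so the list contains two lines. Keeping allowed traffic from those subnets flowing to the SIEM is a deliberate choice in this sketch: only the denials, which carry little security value in this scenario, are diverted.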

While in theory a cloud-based SIEM is affected only by the cost increase (depending on how solid the cloud backend is), an on-premises one may require additional licensing (which could be based on EPS or GB/day) and also take a performance hit, as more data has to be analyzed.
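For a license or daily cap expressed in GB/day, the extra entries have to be converted into volume, which requires an average entry size. The text does not give one, so the 500-byte figure below is a placeholder assumption; substitute a value measured on your own firewall logs. The 5 GB/day of normal ingestion and the two candidate caps are likewise hypothetical.

```python
# Convert extra log entries into GB/day and check them against a daily cap.
BYTES_PER_GB = 1_000_000_000  # decimal GB; confirm which unit your license or cap uses

# ASSUMPTION: placeholder average size of one firewall log entry, not from the source.
AVG_ENTRY_BYTES = 500


def gb_per_day(entries_per_day: float, avg_entry_bytes: float = AVG_ENTRY_BYTES) -> float:
    """Daily ingestion volume in GB for a given entry rate."""
    return entries_per_day * avg_entry_bytes / BYTES_PER_GB


def cap_would_trigger(normal_gb: float, extra_gb: float, cap_gb: float) -> bool:
    """True if normal traffic plus the attack volume exceeds the daily cap."""
    return normal_gb + extra_gb > cap_gb


if __name__ == "__main__":
    # Roughly the daily entry count implied by the $2,000/month example.
    extra = gb_per_day(16_666_667)
    print(f"extra volume: {extra:.2f} GB/day")
    # Hypothetical figures: 5 GB/day of normal ingestion and two candidate caps.
    for cap in (10, 500):
        print(cap, cap_would_trigger(5, extra, cap))
```

With these placeholder numbers the attack adds about 8.33 GB/day. A cap at twice the normal volume (10 GB/day) would stop logging during the attack, while one two orders of magnitude above normal (500 GB/day) would not; that is the trade-off the daily-cap suggestion describes.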

Examples of alerts from Azure Sentinel:

Log ingestion cost increase anomaly detection:

Number of log entries anomaly detection in CommonSecurityLog (the Sentinel table that stores log entries received in Common Event Format, CEF):

Such detections can also protect against anomalous internal log sources. In one instance, a misconfigured network device caused a large spike in log volume as it kept attempting to authenticate against an Internet-based AAA server while being blocked by the firewall. These types of events are typically not subject to intense scrutiny (they have low value from a security perspective and are rather operational issues), so they can go under the radar for a long time.
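The anomaly alerts mentioned above rely on KQL’s built-in time-series functions, such as `series_decompose_anomalies()`. As an illustration of the idea only, and not a reproduction of what KQL does internally, the Python sketch below flags a day whose log count falls outside Tukey’s fences computed over the preceding 14 days. Running it per log source (per device or per table) is what would surface an internal misconfiguration like the AAA example. The 14-day window and k = 1.5 are conventional defaults chosen for this sketch, not values taken from the alerts described here, and the daily counts are made up.

```python
import statistics
from typing import List, Sequence


def tukey_outliers(values: Sequence[float], window: int = 14, k: float = 1.5) -> List[int]:
    """Return the indices whose value lies outside Tukey's fences, computed
    on the preceding `window` values (a trailing baseline)."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        q1, _, q3 = statistics.quantiles(baseline, n=4)  # quartile cut points
        iqr = q3 - q1
        low, high = q1 - k * iqr, q3 + k * iqr
        if values[i] < low or values[i] > high:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Illustrative daily entry counts for one log source: 15 quiet days, then a spike.
    daily = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99, 102, 98, 100, 101, 100, 1500]
    print(tukey_outliers(daily))
```

Only the final day (index 15) is flagged here. A trailing baseline means a sustained attack will eventually be absorbed into "normal", so in practice the baseline window should be long enough, or exclude days already flagged.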