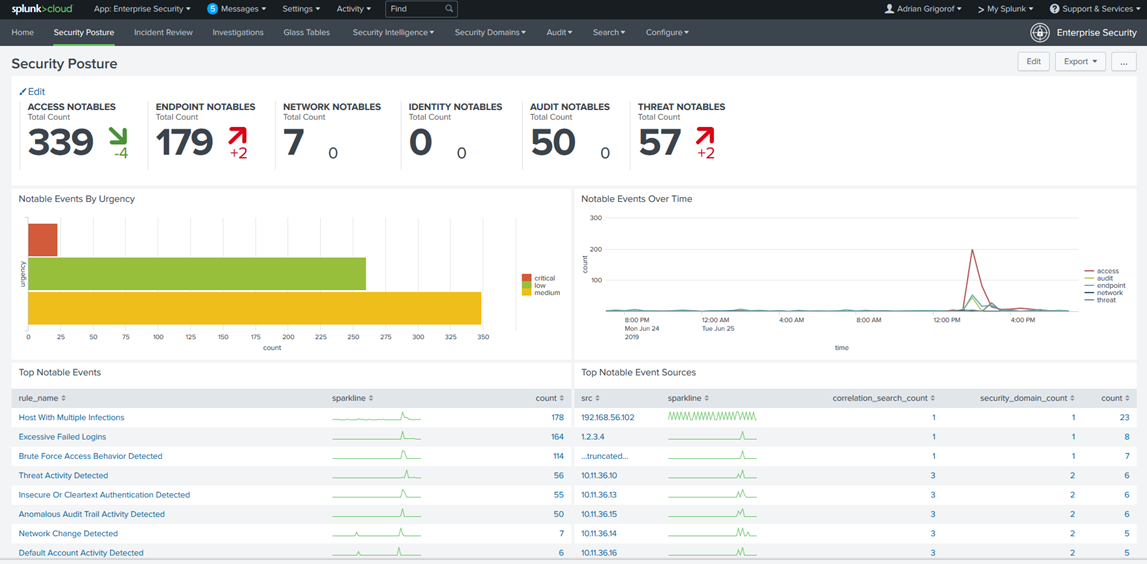

This article reflects the TASK Jun 26, 2019 Presentation: Big changes in SIEMs: A comparison of cloud-born and traditional options

Ask any cybersecurity professional what SIEM stands for and most will hesitate slightly before coming up with “Security Information and Event Management”, a rather long name that is the end result of a journey that started with SIM (Security Information Management), SEM (Security Event Management), SELM (Security Events and Log Management) and sometimes the misspelled version that shows up in many documents as SEIM.

The very term SIEM was coined by Mark Nicolett and Amrit Williams of Gartner in 2005.

Mark Nicolett and Amrit Williams

* * *

The evolution of the SIEM term is a natural effect of the effort over the years to crystallize the role of the security control that collects security information in the form of logs and events and manages it from a single interface. In fact, I have recently noticed that many vendors and cybersecurity professionals talk about security “signals”, so SIEM might well become SEISM: Security Events, Information and Signals Management.

The idea of collecting logs and using them for the analysis and troubleshooting of various appliances, servers and applications has been around for a long time. The ubiquitous Syslog was developed by Eric Allman in the 1980s as a helper tool for the Sendmail project and, of course, at the time it was part of the Unix environment.

Eric Allman

Syslog was quickly adopted as the logging standard for many operating systems and for network and security appliances, though to this day operating systems such as Windows are still not “on board”.

* * *

Around the mid-1990s, syslog started to become the de facto collector of “logs” from many servers and appliances. The emergence of the Internet and its risks (read “crackers”, not to be confused with “hackers”, the benign tinkerers of all things technology) started to push the logging process into a more visible role. However, at the time, the tools of choice were still Unix-based search utilities such as grep (its name comes from g/re/p, aka “globally search a regular expression and print”), developed by Ken Thompson in 1974.

Ken Thompson

Those that needed more advanced systems had to build their own using whatever programming tools they were comfortable with. I remember in the late ’90s building a web interface using CGI and Perl scripts that allowed me to query web browsing data from Microsoft Proxy servers and generate reports on traffic statistics.

Fast forward 10 years and the mid-2000s bring us into a totally new environment. The Internet is a fact of life, e-commerce is the next big thing and the dot-com crash of the early 2000s woke up regulators in both the private and government sectors. PCI, Sarbanes-Oxley (SOX) and HIPAA are now forcing organizations to really keep an eye on their IT infrastructure, and many of these requirements translate into the collection and analysis of logs, be they audit, operational or just general activity.

Paul Sarbanes and Michael G. Oxley

Organizations are starting to see potential value in aggregating and correlating logs from different sources. This in turn drives the need for normalization of logging data to avoid duplication of information (storage is still expensive), indexing of data and the ability to query it through a single interface. As a consequence, many see a business opportunity in this market need, so SIEM applications are starting to flourish. ArcSight, with its Enterprise Security Manager product, became the market leader, though many others challenged it: Check Point had Eventia, LogLogic had the ST and LX appliances, eIQ Networks had SecureVue, CA owned eTrust Security Command Center, Symantec had the SIM appliance, SenSage had Enterprise Security Analytics (ESA), Q1 Labs had QRadar, IBM acquired Consul and Micromuse, and Novell bought e-Security (remember Novell? I still do, as I was a CNE at the time). Where are they now? ArcSight is now owned by Micro Focus and QRadar by IBM, while the others are gone or have morphed into other products. Splunk was also founded in the mid-2000s but it took some time to reach the top of the SIEM industry.

By now we can see that changes happen around the “mids”, though the evolution of technology follows an exponential curve and things may move faster than we think. With the falling cost of storage and of CPU power, storing and processing large volumes of data becomes feasible. Awakening from its long winter that started in the ’80s, AI is starting to make itself relevant. “Your algorithm is as good as your data”, they say about AI/ML, so having large volumes of data available to train your AI is now mandatory.

Criticized for not providing enough troubleshooting and auditing information in the logs, the developers of operating systems and applications take their vengeance by flooding logs with gigabytes of data, some very cryptic, some detailed, some vague, but all using vast amounts of storage. Even though the term itself has been around since the ’90s, Big Data becomes “the thing” and implies dealing with volumes of data from a few dozen terabytes to actual zettabytes, with a zettabyte being 1,000 billion gigabytes.

I’ve dealt with log files for a long time and I went through the exercise of imagining how a gigabyte of log data translates into printed pages. For example, 1 GB of logging data from a Cisco ASA firewall is the equivalent of 4 cubic meters (that’s 141 cubic feet for the “metric”-challenged) of letter-sized pages printed on both sides. That’s a cube with a 1.6-meter side (or 5.2 feet)! Some of my clients, medium-sized organizations, generate 60-70 GB of logs per day, so imagine 240-280 cubic meters (roughly 8,500-9,900 cubic feet) of printed pages to be analyzed on a daily basis by the same security analysts who have to do many other things throughout the day. For this reason, AI and ML (machine learning) started to be promoted as the only way to make sense of such vast amounts of data.

SIEM vendors started to promote AI as the next tireless, all-knowing, all-observing security analyst that would offload the burden of threat hunting from humans. Any vague use of ML concepts gave the marketing departments an excuse to use the latest buzzwords and give their platforms an edge in the fight against competitors. Presentations, trade shows, blogs and articles started to showcase specific instances of cybersecurity threats that could be spotted by AI/ML, though they were hardly typical of what a regular organization may encounter. Visiting trade shows, I was trying to find a vendor that would not start their sales pitch by describing how their SIEM platform is able to identify when a user is suddenly connecting from Russia or Ukraine…

Armed with spectacular dashboards and the right mix of buzzwords, vendors were promoting SIEMs as the Holy Grail of cybersecurity, able to identify attacks through the sheer power of AI/ML and allowing those that invested in such tools to stay secure without hiring an army of security analysts.

The present seems to break away from the “mid” timeline, telling us that things are starting to move faster. The migration to the cloud is becoming a “rush” to the cloud, more or less organized, with entire organizations or individual business departments embracing the idea of instant deployment of resources, pay-for-what-you-use and the minimal upfront costs that the cloud and SaaS platforms bring. Naturally, the SIEM platforms have to catch up with these developments and many SIEM vendors started working on facilitating these migrations.

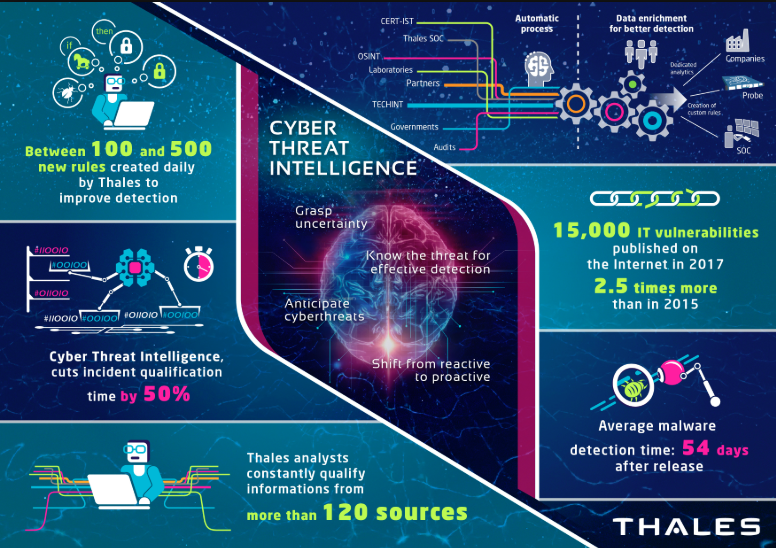

Another trend that is quite obvious in the current SIEM market is the emphasis on threat intelligence. Throughout the years, many SIEM use-cases developed around visibility into the activity of threat actors, identified by their IP addresses, domain names and the hashes of their malware. A wide range of cybersecurity vendors, non-profit organizations and pure security enthusiasts proudly wearing their white hats started to collect indicators of compromise (IoCs) and share them with the community at large. These collections of IoCs became threat intelligence feeds, varying in quality from a simple dump of IPs that were at some point connected to malware to subscription-based lists that are updated daily and curated by human analysts. SIEM platforms naturally had to be able to ingest such threat intelligence feeds and correlate the log data against them.

Sample threat intelligence infographic from Thales Group

Another aspect that is starting to become a must-have feature of any SIEM is the ability to perform a range of tasks based on the activity detected in the logs. This has been labeled SOAR, or Security Orchestration, Automation and Response. As many security controls are starting to provide interfaces and APIs for integration with external platforms, SOAR allows a SIEM to perform complex tasks such as automatically isolating from the network a computer that is perceived as being compromised.

Finally, through the effort of deploying more AI/ML-driven capabilities, many SIEM vendors realized that with the right data available, these systems are able to track every user or entity (that is, anything that has an IP or some kind of unique identifier), build a baseline of their “normal” activity and alert when something unusual happens. SIEMs providing UBA or UEBA capabilities (that is, User Behaviour Analytics extended to User and Entity Behaviour Analytics) are now able to model the behaviour of both humans and machines within a network.
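To make the baseline idea concrete, here is a minimal sketch of one possible approach, assuming a simple z-score test over a user's daily login counts. The function name, the sample numbers and the threshold of 3 standard deviations are illustrative assumptions, not the method of any particular SIEM product.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` if it deviates from the baseline built from `history`.

    Assumption: the baseline is the mean and sample standard deviation of past
    daily counts; real UEBA engines use far richer models.
    """
    if len(history) < 2:
        return False  # not enough data to build a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return observed != baseline
    return abs(observed - baseline) / spread > threshold

# Hypothetical daily login counts for one user over a week
daily_logins = [12, 15, 11, 14, 13, 12, 16]

print(is_anomalous(daily_logins, 14))  # a normal day
print(is_anomalous(daily_logins, 90))  # a sudden spike
```

The same test could be applied per entity (a server, a service account) as long as each one keeps its own history.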

A trend that is becoming visible today is that SIEM vendors are no longer presenting their products as virtual security analysts. Instead, realizing that the promised AI/ML capabilities have yet to deliver, they now promote SIEMs as powerful tools for human security analysts, increasing their productivity by providing quick access to relevant data. According to industry stats, a security analyst is able to process 10 to 12 security incidents per day. Some organizations have thousands of such events on a daily basis, so if a tool is able to double the productivity of a human analyst, then such a tool is obviously of great help and probably worth getting.

More and more organizations that invested significant money in SIEM in the mid-2010s are now starting to look into the promised ROI, and senior managers don’t like what they see. The SIEM industry is bound to enter a new era in which its products have to deliver value for the money invested in them.

* * *

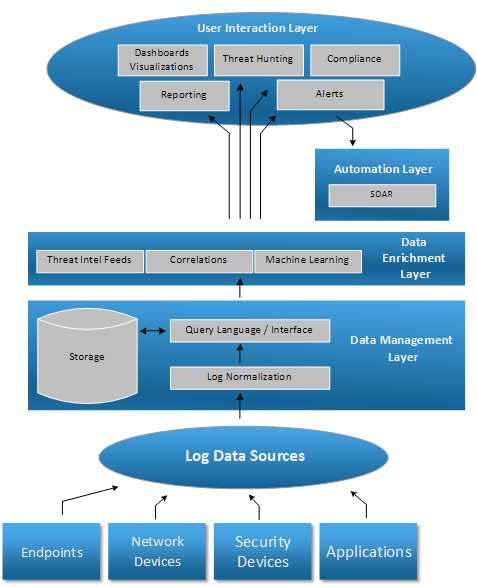

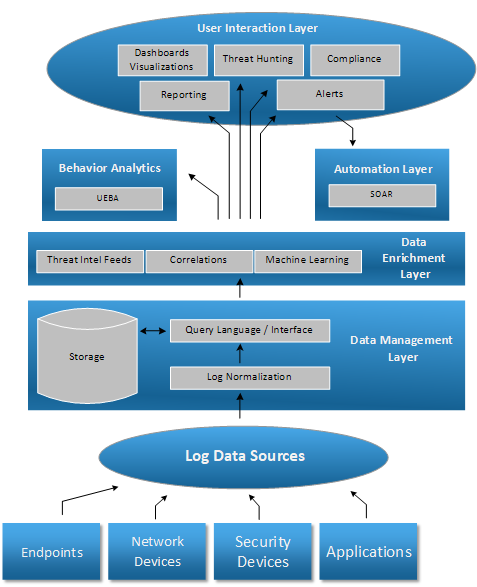

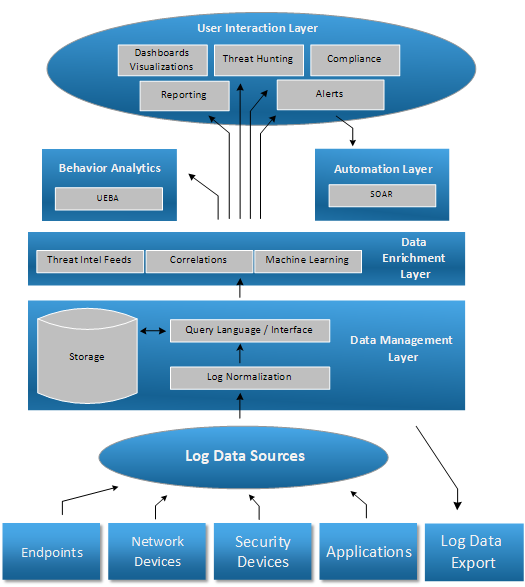

After many years of development, with various solutions redefining what a SIEM is, the industry has come to a point where in order to be called a SIEM, certain characteristics and capabilities must be present.

So what are the expectations from a SIEM product? Let’s start from the ground up and identify the features of a SIEM.

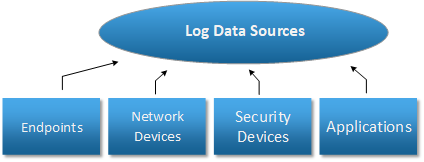

Log and event collection

A SIEM needs to be able to collect data from various endpoints (Windows and Linux servers and workstations), network devices such as switches, routers and load balancers, security devices such as firewalls, IPS/IDS, VPN, URL filtering and so on, as well as applications such as SQL servers and any type of application that is able to produce a log that can be used for security and troubleshooting purposes.

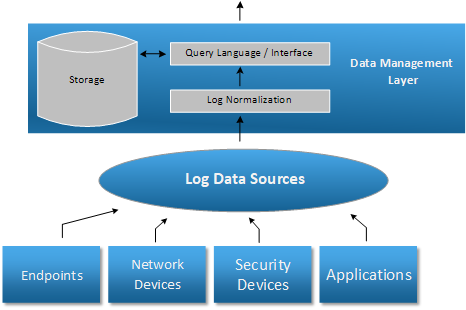

Data management

The log data has to be stored for processing and retention, with the ability to query it using complex filtering and aggregation functions, normalizing it to remove duplication and increase query performance. The storage component directly affects the cost of a SIEM solution as the volume of data can be quite high.
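As a rough illustration of normalization followed by a filtered, aggregated query, the sketch below maps records from two log sources into one common schema and then counts denied connections per source IP. The field names and sample records are hypothetical and do not reflect any vendor's log format.

```python
from collections import Counter

def normalize_firewall(record):
    # Hypothetical firewall format: {"src", "dst", "action"}
    return {
        "source": "firewall",
        "src_ip": record["src"],
        "dst_ip": record["dst"],
        "action": record["action"].lower(),
    }

def normalize_proxy(record):
    # Hypothetical proxy format: {"client_ip", "server_ip", "status"}
    return {
        "source": "proxy",
        "src_ip": record["client_ip"],
        "dst_ip": record["server_ip"],
        "action": "deny" if record["status"] == 403 else "allow",
    }

raw_firewall = [
    {"src": "10.0.0.5", "dst": "203.0.113.7", "action": "DENY"},
    {"src": "10.0.0.9", "dst": "198.51.100.4", "action": "ALLOW"},
]
raw_proxy = [
    {"client_ip": "10.0.0.5", "server_ip": "203.0.113.7", "status": 403},
    {"client_ip": "10.0.0.9", "server_ip": "198.51.100.4", "status": 200},
]

# One schema for every source, so a single query covers all of them
events = [normalize_firewall(r) for r in raw_firewall]
events += [normalize_proxy(r) for r in raw_proxy]

# Filter (denied only) and aggregate (count per source IP)
denied_by_source_ip = Counter(e["src_ip"] for e in events if e["action"] == "deny")
print(denied_by_source_ip.most_common())
```

Once every source shares the same field names, the query no longer needs to know which device produced the record.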

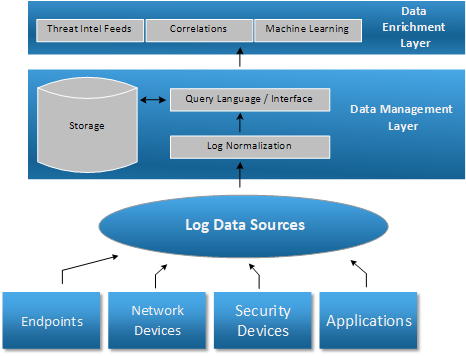

Data Enrichment

Once the SIEM logging data is extracted through queries, the Data Enrichment layer provided by a modern SIEM should be able to enrich it with additional information such as geolocation, correlation with threat intelligence feeds, insights obtained from Machine Learning algorithms and most importantly, information obtained from the correlation of data between logs from multiple security controls. This is where 1 + 1 becomes 3.
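A minimal sketch of such an enrichment step might look like the following, assuming the threat intelligence feed is a plain set of IP addresses and geolocation is a small lookup table of networks. Both data sets and the `enrich` helper are made up for illustration; a real SIEM would use maintained feeds and a proper GeoIP database.

```python
import ipaddress

# Hypothetical threat intelligence feed: IPs reported as malicious
THREAT_FEED = {"198.51.100.23", "203.0.113.7"}

# Hypothetical geolocation table keyed by network
GEO_TABLE = {
    ipaddress.ip_network("203.0.113.0/24"): "Example Region A",
    ipaddress.ip_network("198.51.100.0/24"): "Example Region B",
}

def enrich(event):
    """Return a copy of the event with threat and geolocation context added."""
    enriched = dict(event)
    destination = ipaddress.ip_address(event["dst_ip"])
    enriched["threat_match"] = event["dst_ip"] in THREAT_FEED
    enriched["geo"] = next(
        (label for network, label in GEO_TABLE.items() if destination in network),
        "unknown",
    )
    return enriched

print(enrich({"src_ip": "10.0.0.5", "dst_ip": "203.0.113.7"}))
print(enrich({"src_ip": "10.0.0.9", "dst_ip": "192.0.2.10"}))
```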

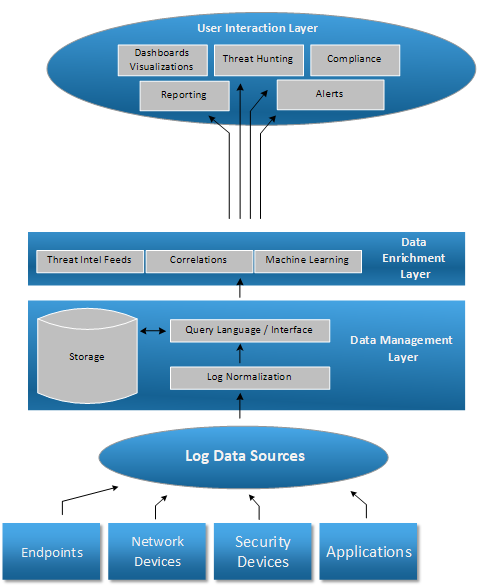

User Interaction Layer

The User Interaction Layer provides visibility into the data extracted from the storage and enriched with additional information. There are various ways to interact with users and most SIEM platforms take advantage of them:

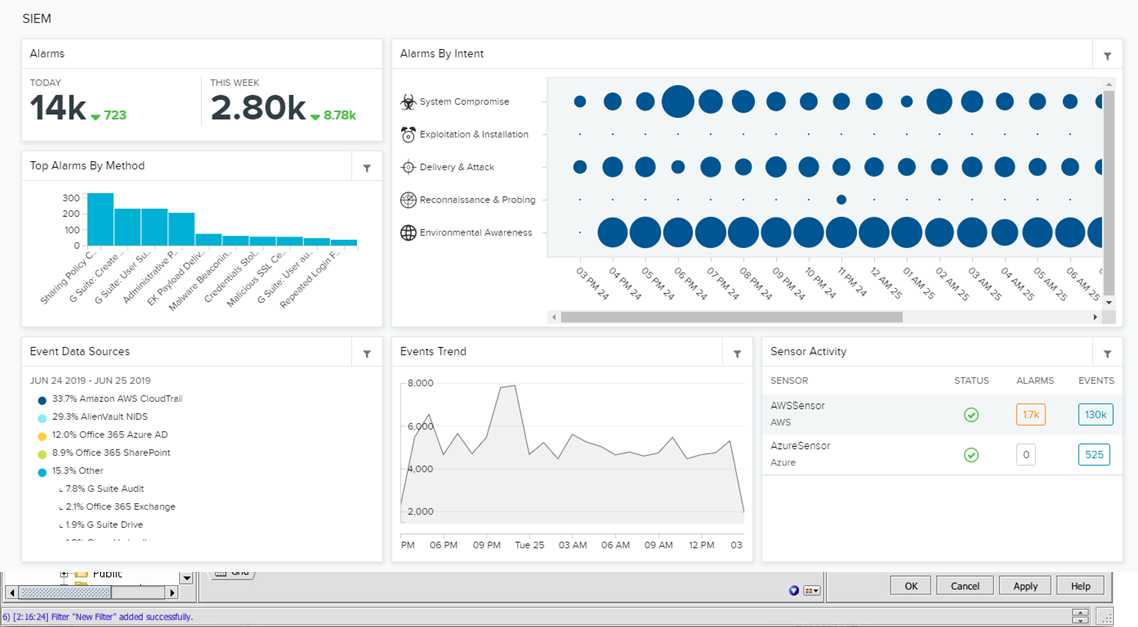

– Dashboard

– Reports (such as daily report with top web users)

– Threat Hunting – Interface that allows searches for specific suspicious events, drill-downs, annotations, teamwork, etc.

– Compliance – Pre-built reports tailored to match logging and reporting requirements for regulations such as PCI, HIPAA, etc.

– Alerts – Notifications and playbooks triggered by specific conditions, defined by use-cases identified by security analysts

While many are attracted by Hollywood-style dashboards in the beginning, the real workhorse of a SIEM is the alerting capability, followed by threat hunting. With the exception of some large security operations centers (SOCs) that may have wide displays with real-time dashboards, security operators don’t have the luxury of staring at dashboards, waiting for some sort of outlier to bring them into action. Instead, they rely on alerts delivered by email or text that allow them to act on identified threats right away.
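An alert use-case is essentially a condition evaluated over incoming events. As an illustrative sketch, the rule below raises an alert when a user accumulates a given number of failed logins within a sliding time window; the event layout, the limit of 5 and the 10-minute window are assumptions chosen for the example.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def failed_login_alerts(events, limit=5, window=timedelta(minutes=10)):
    """Return (user, timestamp) pairs for each burst of failed logins.

    `events` is an iterable of (timestamp, user, outcome) tuples.
    """
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    alerts = []
    for timestamp, user, outcome in sorted(events):
        if outcome != "failure":
            continue
        failures = recent[user]
        failures.append(timestamp)
        # Drop failures that fell out of the sliding window
        while timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) >= limit:
            alerts.append((user, timestamp))
            failures.clear()  # avoid re-alerting on the same burst
    return alerts

start = datetime(2019, 6, 26, 9, 0)
sample_events = [(start + timedelta(minutes=i), "alice", "failure") for i in range(5)]
sample_events += [
    (start, "bob", "failure"),
    (start + timedelta(minutes=30), "bob", "failure"),
]

for user, when in failed_login_alerts(sample_events):
    print(f"ALERT: repeated failed logins for {user} at {when}")
```

In practice the alert would be handed to an email, text or ticketing integration rather than printed.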

Automation Layer

The automation layer is the one dealing with SOAR. Any modern SIEM should provide options to integrate with IT operations tools such as ServiceNow, Remedy, as well as a range of standard interfaces to communicate with such 3rd party tools.
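Conceptually, a playbook is an ordered list of actions tied to an alert type. The sketch below shows that idea only; `isolate_host` and `open_ticket` are hypothetical placeholders that return strings, standing in for real API calls to a network control or a ticketing tool.

```python
def isolate_host(alert):
    # Hypothetical placeholder for a call to a firewall, NAC or EDR API
    return f"isolated {alert['host']}"

def open_ticket(alert):
    # Hypothetical placeholder for a call to an IT operations/ticketing tool
    return f"ticket opened for {alert['type']} on {alert['host']}"

# Alert type -> ordered list of response steps (illustrative mapping)
PLAYBOOKS = {
    "malware_detected": [isolate_host, open_ticket],
    "policy_violation": [open_ticket],
}

def run_playbook(alert):
    """Run every step registered for the alert type and collect the results."""
    return [step(alert) for step in PLAYBOOKS.get(alert["type"], [])]

print(run_playbook({"type": "malware_detected", "host": "ws-042"}))
```

Keeping the mapping as data rather than code makes it easier for analysts to review which automated actions are tied to which alerts.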

Behaviour Analytics

Many SIEMs are starting to include a behaviour analytics component, the UBA/UEBA functionality described above. This is perfectly tailored for machine learning so we can expect to see it in most modern SIEM platforms.

Log Data Export

Finally, a SIEM platform is expected to be able to make the log data available to 3rd party tools, be it a more specialized analytical platform or auditors who bring their own tools and require a sample of the logged data.

* * *

Now that we know how a SIEM evolved and what a modern SIEM should provide, who are the main competitors in the SIEM market? For such information we typically refer to Gartner’s Magic Quadrant. For 2018, the SIEM vendors that were identified as competing in the SIEM area are as follows:

Splunk (their Enterprise Security product) leads the way, followed by IBM’s QRadar and LogRhythm. Traditional platforms such as ArcSight (owned now by Micro Focus) seem to be losing traction. This chart may soon change as we will see in the following sections.

* * *

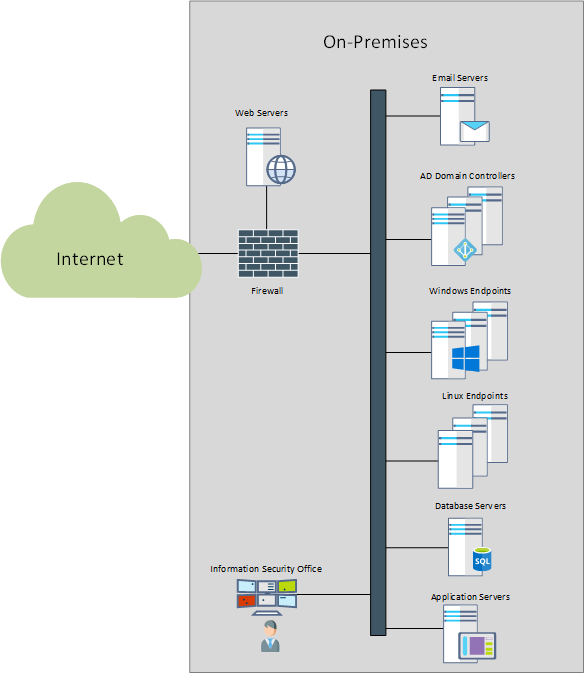

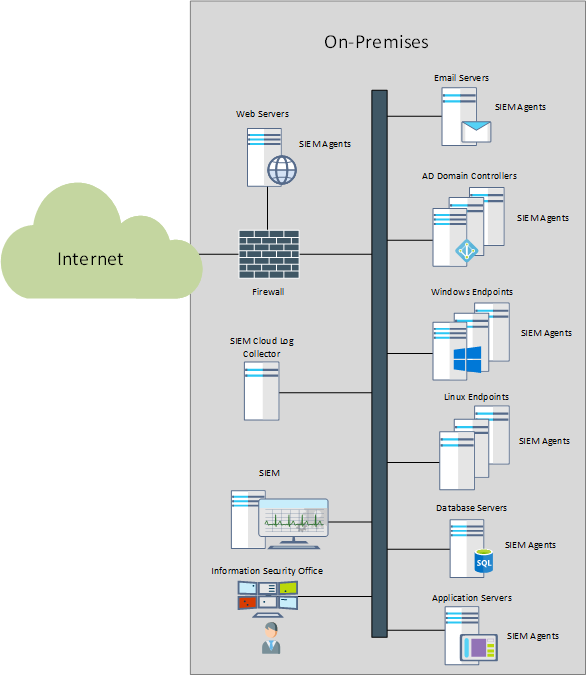

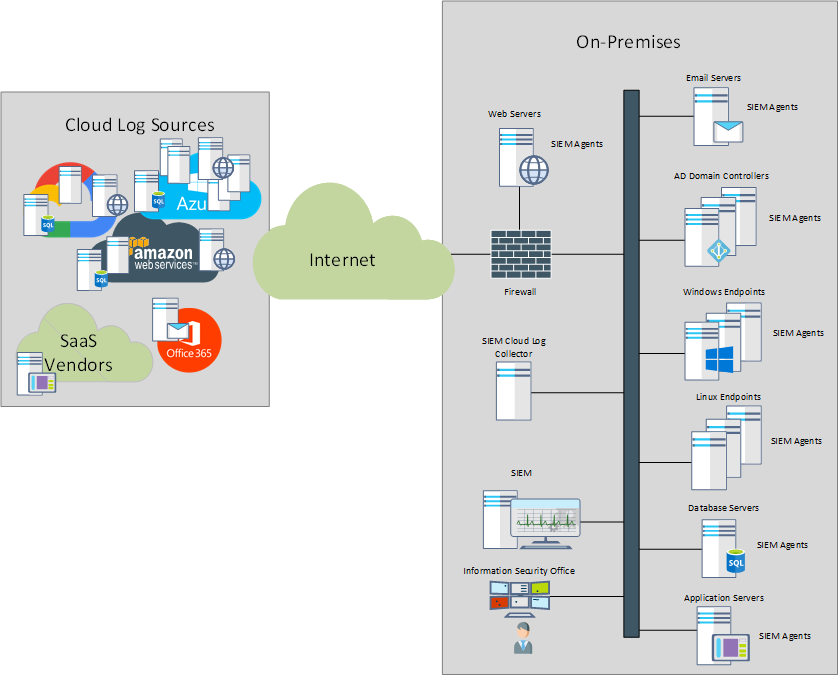

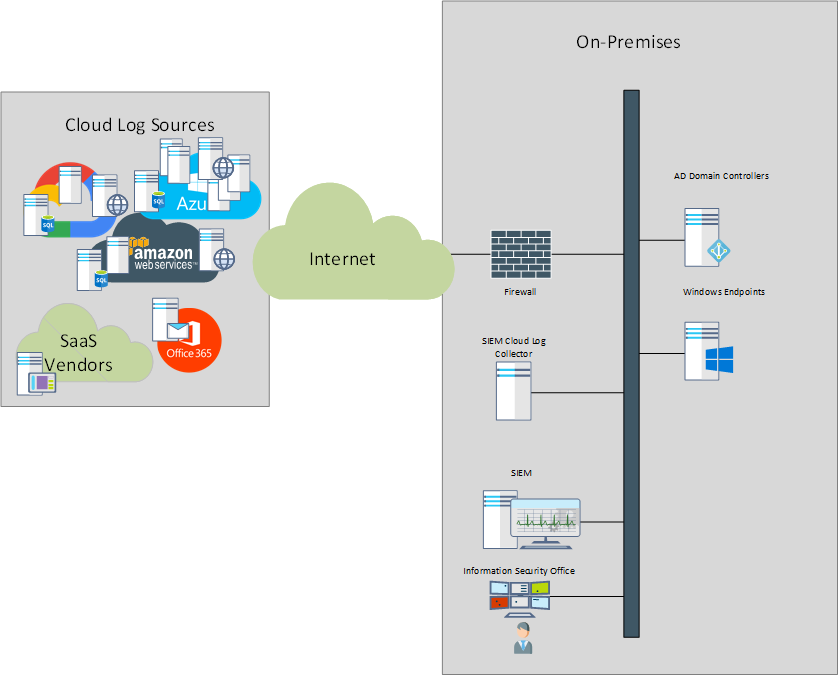

Let’s look at the traditional deployment of a SIEM platform. Until recently, the typical organization had all its resources, including endpoints (workstations, servers), network and security appliances and application servers, on premises, protected by a layer of firewalls and other security controls. Web servers that provided content for external users were hosted in DMZs, segregated from the internal network and typically with additional security controls.

For such environments, the natural place for a SIEM was right alongside most log sources, with SIEM agents deployed to endpoints that lacked built-in capabilities for logging to 3rd parties (such as Windows servers and workstations). For the devices, appliances and applications that had reporting options such as syslog, the SIEM is typically able to ingest the logs by acting as a syslog server.

This model worked fine for a few years. However, a disruptor, aka the “cloud”, started to change things.
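To give a feel for what a SIEM acting as a syslog server has to decode, here is a small sketch that parses the priority field found at the start of a traditional (RFC 3164 style) syslog message. The priority value encodes the facility and severity as `facility * 8 + severity`. The helper name is an assumption, and the sample line follows the example format given in RFC 3164.

```python
import re

SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

PRI_PATTERN = re.compile(r"^<(\d{1,3})>(.*)$", re.DOTALL)

def parse_syslog_priority(raw):
    """Split a raw syslog line into facility, severity and the remaining text."""
    match = PRI_PATTERN.match(raw)
    if match is None:
        raise ValueError("message does not start with a <PRI> field")
    priority = int(match.group(1))
    if priority > 191:  # highest valid value: facility 23, severity 7
        raise ValueError("priority value out of range")
    facility, severity = divmod(priority, 8)
    return {
        "facility": facility,
        "severity": SEVERITIES[severity],
        "message": match.group(2),
    }

sample = "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8"
print(parse_syslog_priority(sample))
```

A full collector would go on to parse the timestamp, host and application tag, which is where vendor-specific parsers come in.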

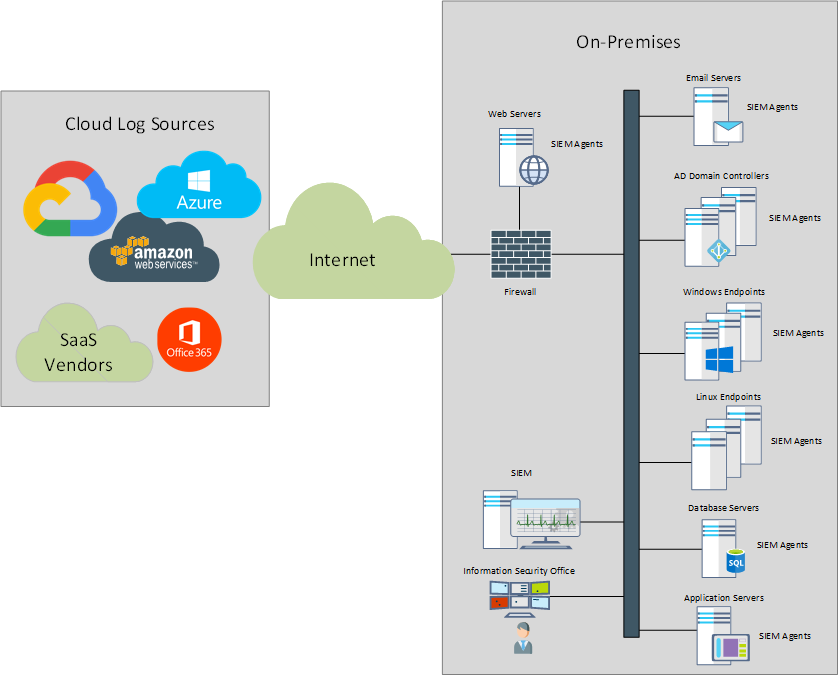

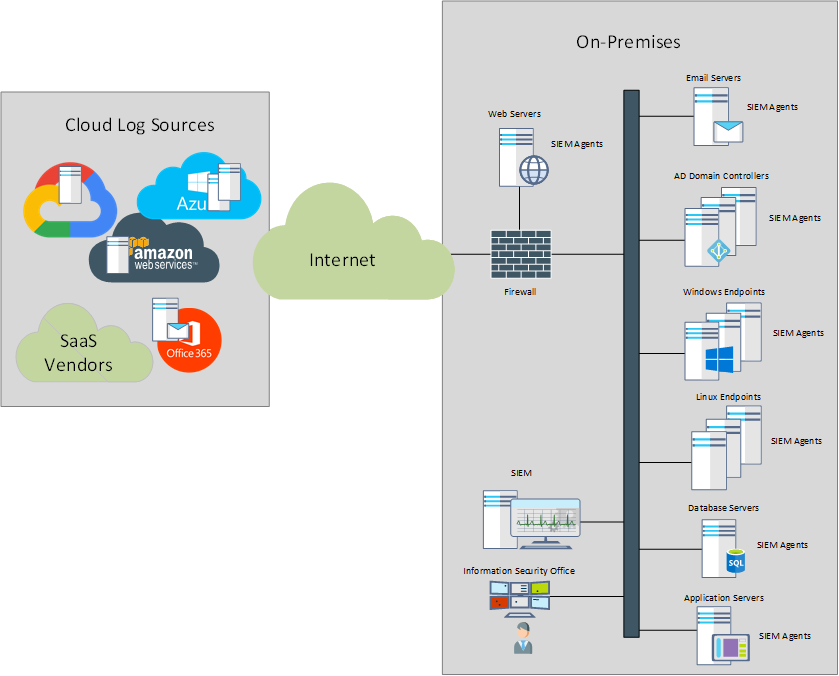

Driven by the ability to deploy resources with a click of the mouse, limit capital expenses and provision capacity on demand, various business groups within organizations started to take advantage of it.

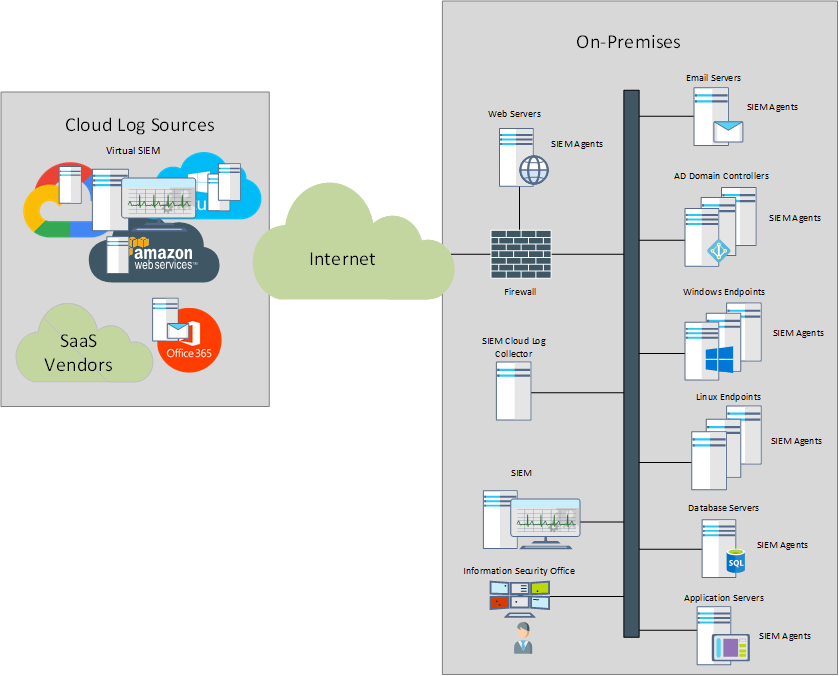

Because they lacked the ability to reach the on-prem SIEM, the cloud-hosted resources were (and still are) typically ignored or deemed “protected” by the cloud provider. The SIEM vendors adapted by providing specialized cloud collectors that connect to cloud-based resources, retrieve the logging data and forward it to the on-prem SIEM. Most SIEM vendors would also provide virtualized instances of their appliances to be deployed in the cloud, right next to the resources running there.

This type of deployment, which we can consider “traditional”, has specific challenges, some related to SIEM in general, others related to its ability to deal with the hybrid infrastructure that characterizes many organizations these days. Let’s look at these challenges and describe the actual problem.

1. Initial Costs

SIEM appliances deal with a large volume of data and they need to parse, index and retrieve this data on demand, and do it fast. This requires hardware resources that make almost any SIEM platform a very expensive one. When purchasing a SIEM, one needs to make sure that its capacity is good for at least 5 years and able to cover the constant growth of logging data. Assuming 25% annual growth, if the current logging volume is around 10 GB per day, within 5 years the SIEM appliance would need to support just over 30 GB per day.

Deploying a SIEM appliance and configuring the log sources and the initial use-cases can add up to significant professional services fees, easily reaching $30,000-$50,000. In addition to the initial cost of the appliance and its deployment, there are maintenance contracts and licenses. Due to the high costs, deploying a SIEM is prohibitive for smaller organizations.

2. Use-cases

While most SIEM platforms come with a number of built-in use cases, their value is not that great as they have to cover a generic situation. Since each organization is unique, the use-cases need to be customized based on the existing logging data and the security policies that need to be followed. The engineers deploying the SIEM may assist with the initial set of use-cases but, without proper tuning, these tend to become obsolete and end up generating reports or alerts that nobody pays attention to. An organization that is serious about using the SIEM to its full capacity has to implement a use-case development life cycle that ensures that each use-case is regularly reviewed, adjusted or decommissioned if no longer relevant. Many information security administrators hesitate to remove alerts and reports even though they don’t really get actionable intelligence from them.

3. Skills

Deploying and managing a SIEM requires a mix of skills that is not easily found. SIEM engineers need to understand the required capacity (which, as discussed above, needs to cover the logging needs for at least a few years) as well as the logging sources and the type of data they log. They need good programming skills for both building log parsers and scripting the use cases, and good knowledge of security in order to determine what type of use cases should be deployed and what data to use to implement them. People can be trained and most vendors provide training options, but given the lack of skills on the market, the risk of losing your engineers to an organization that offers better pay is a harsh reality for most companies.

4. Cloud

As we discussed in the previous section, the traditional SIEM requires some additional components in order to gain visibility into the logging data generated by cloud applications. In practice, many cloud-hosted applications end up being ignored from a logging perspective, on the assumption that just by being hosted in the cloud they are more secure, though many forget that there is no cloud, it’s just someone else’s computer.

5. Data Volume

Based on Cisco’s statistics, Internet traffic is increasing by 21% per year and we can expect the volume of logging data to follow the same pattern. This means that if an organization is currently generating 10 GB per day, the volume will reach around 26 GB per day in 5 years. When purchasing a SIEM appliance, one needs to make sure that it will cover the business needs for at least 5 years, the normal life cycle for many hardware-based appliances. It is difficult to find budget to upgrade appliances every other year. So from the start, one needs to pay for capacity and licenses to cover 2.5 to 3 times the current volume. These are both capital and operational costs that add up to a significant number.
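The capacity projection is a straightforward compound-growth calculation. The short sketch below works it through for a starting volume of 10 GB per day over 5 years, using the two annual growth rates mentioned in this article (21% and 25%); the function name is just an illustrative choice.

```python
def projected_daily_volume(current_gb, annual_growth, years):
    """Compound the current daily log volume by a fixed annual growth rate."""
    return current_gb * (1 + annual_growth) ** years

for rate in (0.21, 0.25):
    volume = projected_daily_volume(10, rate, 5)
    print(f"{rate:.0%} annual growth: {volume:.1f} GB/day after 5 years")
# 21% annual growth: 25.9 GB/day after 5 years
# 25% annual growth: 30.5 GB/day after 5 years
```

Small changes in the assumed growth rate move the 5-year figure noticeably, which is one reason sizing an appliance up front is hard.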

6. Operating Costs

The costs of deploying a SIEM don’t end when the appliance is up and running. These devices need monitoring, maintenance, upgrades and troubleshooting, and these tasks require skilled personnel. It is not unusual to have several engineers fully dedicated to the day-to-day operation of a SIEM infrastructure.

Even with all these challenges, according to Research and Markets, SIEMs and related technologies were a $5.3 billion market in 2018, and the market is expected to grow at a compound annual growth rate of 19.7 percent, to $12.9 billion by 2023. SIEMs are the fastest-growing segment of the market.

* * *

Even though major cloud services like AWS, Azure and Google Cloud were released by 2010, they didn’t start to become an important part of the IT infrastructure until the mid-2010s. As we have seen above, the traditional SIEM deployment more or less ignored the cloud infrastructure or dealt with it as an afterthought. However, as organizations discovered the benefits of the cloud and the costs of Internet connectivity dropped, more and more servers and applications started to move to the cloud.

With this migration, the on-premises infrastructure is starting to diminish, with some organizations maintaining only the minimum necessary to allow employees to authenticate and access the Internet securely. We might not be 100% there yet, but the trend is quite visible and at some point even the desktops might become virtualized in the cloud (as is the case with Azure’s Windows Virtual Desktop).

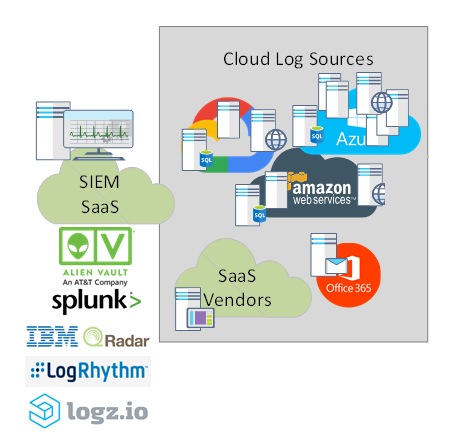

Most SIEM vendors identified the trend well in advance and followed it by offering their appliances as services on most cloud platforms, as well as by building their own cloud and offering SIEM as a cloud service. The on-prem collector of cloud logs became the on-prem log collector that sends the on-prem logs to the cloud.

With the move of the SIEM to a SaaS model, all the cloud-related advantages are now applicable to SIEM platforms:

1. Cost Savings

By using cloud infrastructure and its pay-as-you-go system, the large initial investments in a SIEM platform are no longer a factor, and an organization can start with a minimal investment and increase it as needed. Most cloud-based deployments also incur lower professional services fees, as the SIEM cloud vendors tend to standardize the onboarding process and offer the services of their engineers free of charge. The traditional 5-year replacement cycle and the corresponding costs are no longer an issue.

2. Maintenance

Many on-prem solutions tend to fall behind the vendor updates, and for a good reason. Any upgrade, especially a major one, requires special planning, testing, training and potentially additional upgrade costs, and is always at risk of failure. A cloud-based solution will always be at the “latest version” and all the due diligence work is done by the SaaS provider.

3. Accessibility

As most SaaS platforms make their interfaces available from any location that has Internet access, organizations can now take advantage of workforce mobility and allow their staff to manage the infrastructure from almost any location.

4. Reliability

The cloud also reduces costs related to downtime. While no platform is infallible, downtime is rare in cloud/SaaS systems.

Most of the major SIEM vendors are now providing a cloud-based version of their product, with very competitive pricing and sometimes with a “free” tier that can encourage smaller organizations to deploy a SIEM.

SIEM vendors with a SaaS offering (in alphabetical order): AlienVault USM, Exabeam, LogRhythm, Logz.io (based on Elastic Stack), IBM QRadar, Splunk, Sumo Logic and others.

While SIEM SaaS allows organizations to send their logs to the vendor’s cloud, not everybody is comfortable with or allowed (due to local legislation on data sovereignty) to do so. Others simply don’t trust cloud platforms that much. For such potential customers, MSSPs developed SIEM offerings based on private clouds that are designed to comply with most of the restrictions that such customers may have. Typically, large MSSPs would adopt one of the main SIEM platforms such as Splunk, LogRhythm or ArcSight. One example in Canada is TELUS, which offers a SIEM hosted on its private cloud and based on the LogRhythm platform.

With all the advantages offered by SaaS SIEM, a significant development happened at the beginning of 2019 that may have a big influence on the SIEM market. Both Microsoft and Google announced their own cloud-based SIEM products, Azure Sentinel and Backstory. While technically it was not Google itself that released the product but its sister company Chronicle (both owned by Alphabet), it is nevertheless based on Google’s cloud infrastructure. In fact, on June 27th, 2019, Google Cloud CEO Thomas Kurian announced that Chronicle would be absorbed into Google Cloud.

Amazon also appears to be getting closer to releasing a SIEM service, as they recently made public the AWS Security Hub, not an actual SIEM but an aggregator of security information from multiple services as well as a compliance monitoring product. It is very likely that we will see a fully-fledged SIEM product from Amazon in the not-too-distant future.

Let’s see now why this is an important milestone for the SIEM industry and what the sudden intrusion into their territory by major cloud providers such as Microsoft and Google means for the incumbents.

Cloud-Born

The SIEMs from Microsoft and Chronicle were designed from scratch for the cloud; in fact, they are built from mature cloud services that have been on the market for a while. These are not products retrofitted to adjust to cloud requirements and overall concepts. When the traditional SIEMs were designed, their developers made certain engineering choices that affect the core of the product functionality. At the time, these products were on-prem applications, so even a SaaS “transformation” is still subject to that initial design. While this may not be clearly visible to a consumer, it does affect their functionality and the ability to extend to a true cloud environment.

Cloud Engineers

Major cloud providers have access to a real army of cloud engineers with expertise and resources that cannot be matched by a SIEM vendor. All SIEM vendors building their SaaS platform have to either use one of the major cloud providers or (re)invent the cloud service themselves and keep up with all the developments in cloud computing. Even the largest SIEM vendors would have a problem matching this type of expertise. In time, the difference will become visible and may affect the competitiveness of the traditional SIEM vendors.

Visibility

Major cloud providers have extensive visibility into their infrastructure, with vast amounts of data available for AI/ML algorithms to identify potential threats. Both Microsoft and Chronicle are aggressively advertising their ability to correlate data across environments and assist their customers by providing access to threat information. While SIEM SaaS platforms also have potential access to large sets of data, they are nowhere close to what clouds like Azure, Google or AWS have.

Resources

Last but not least, major cloud providers have access to resources that are far beyond what even a very successful SIEM vendor like Splunk or even IBM has. They can afford to allocate highly skilled engineers and support their efforts in developing their SIEM offering without making a dent in their budgets. While not necessarily a guarantee of success, having such resources available can make a big difference in the race to the top of the market.

* * *

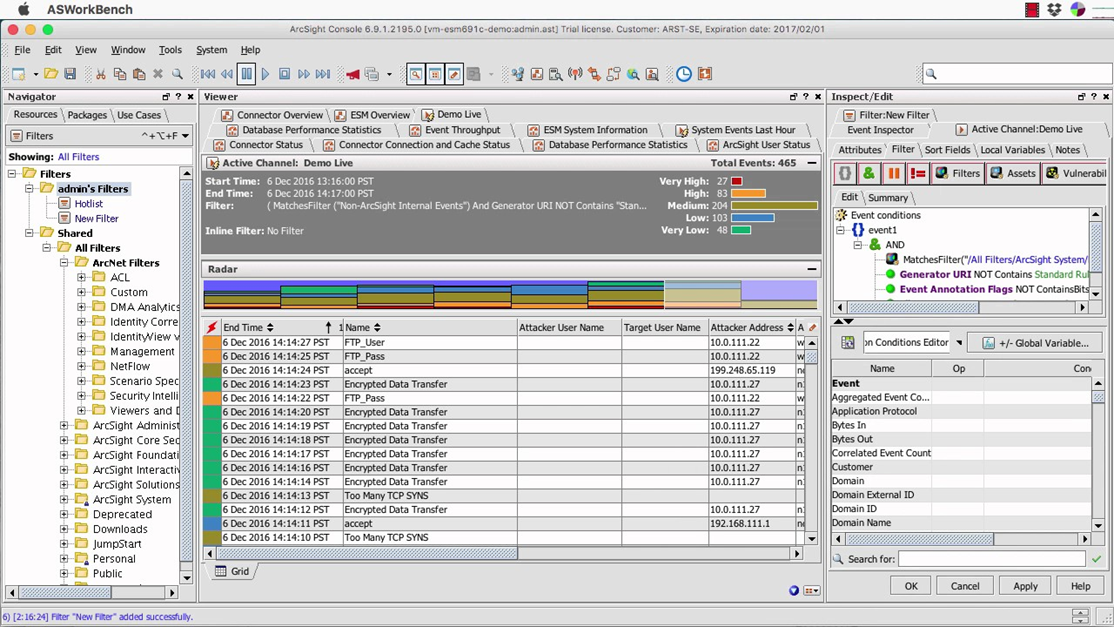

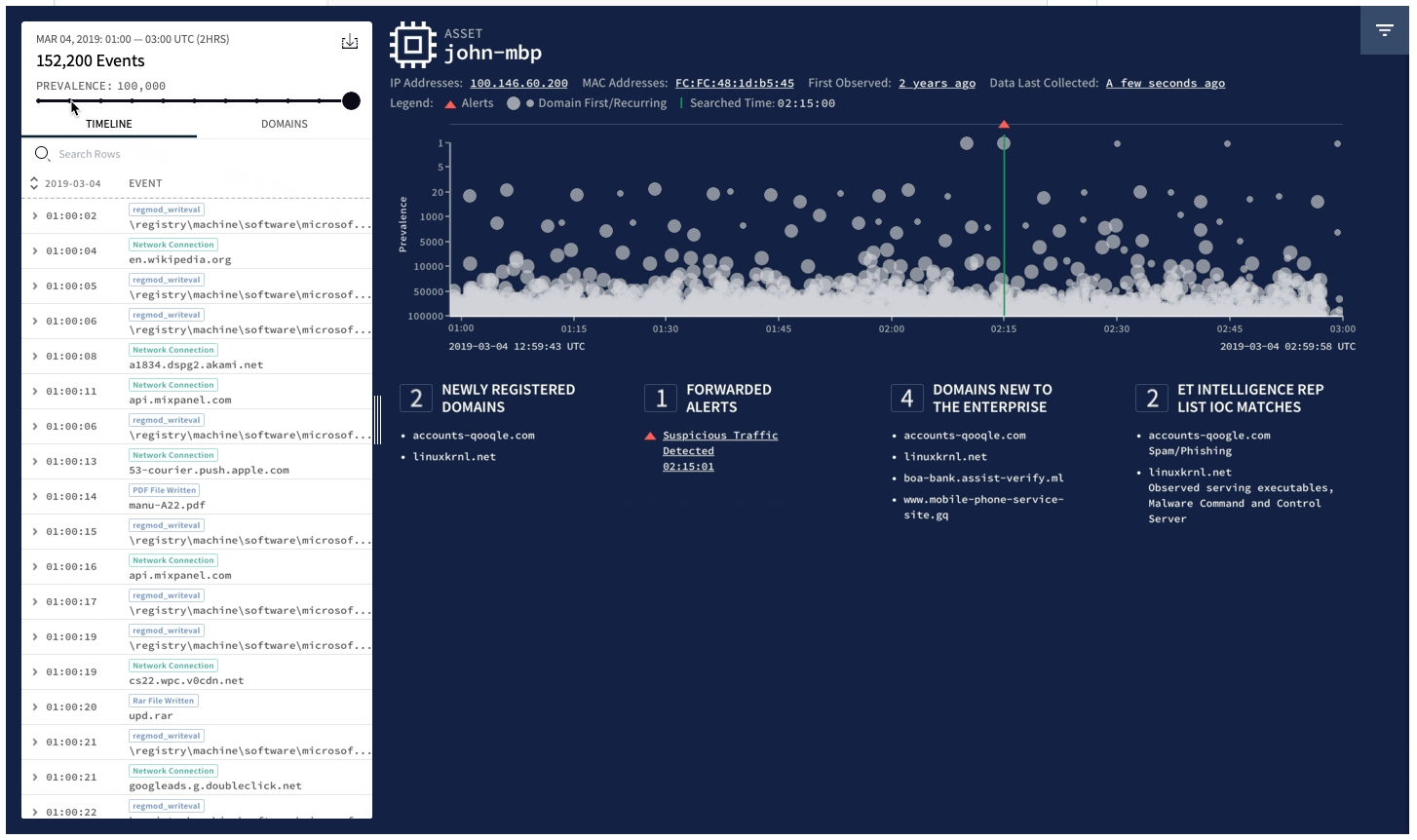

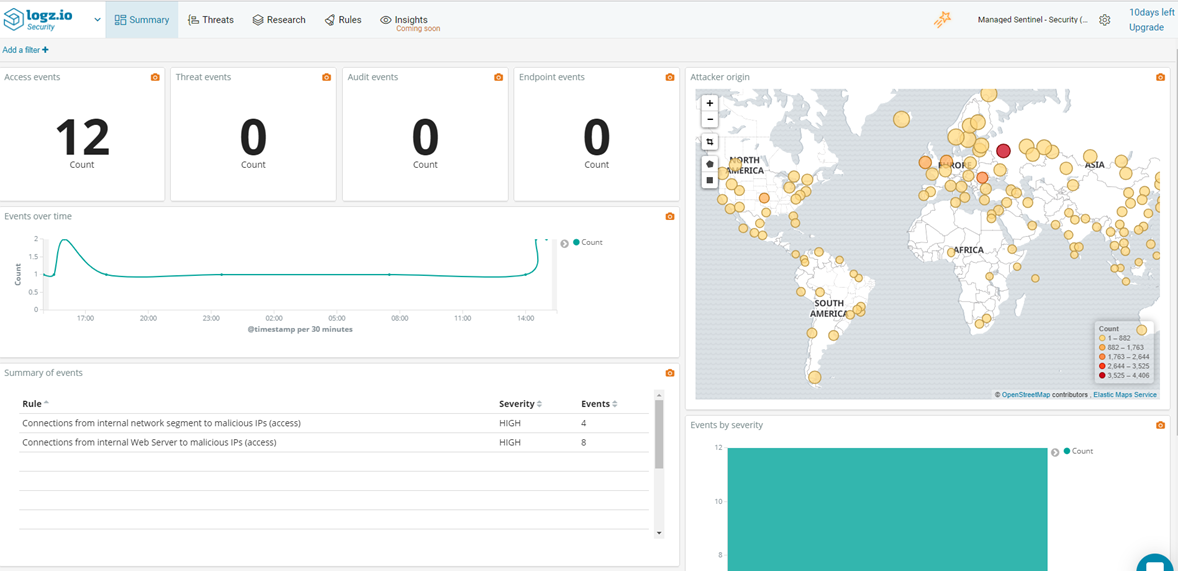

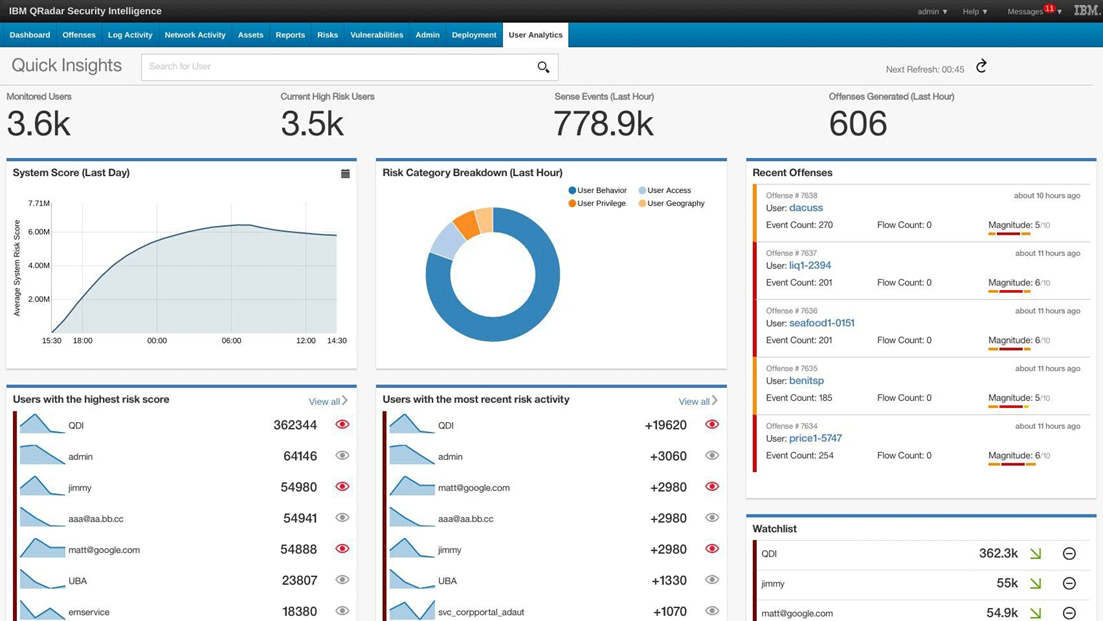

Screenshots from various SIEM platforms (in alphabetical order):

AT&T AlienVault USM

Micro Focus ArcSight ESM

Chronicle Backstory

LogRhythm

Logz.io

IBM QRadar

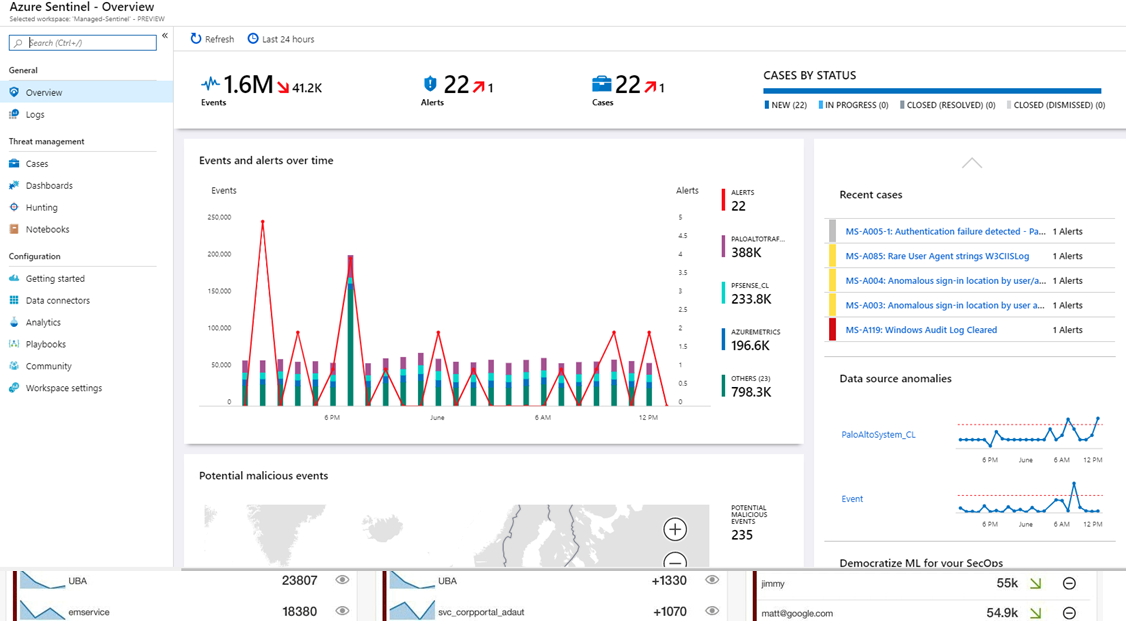

Microsoft Azure Sentinel

Splunk Enterprise Security